I was wrapping up some of my writing and planning a blog post about Azarakhsh Mokri’s discussions on free will when suddenly, it felt like something had burrowed into me — a fat, round, blue worm of sorts. That’s when it hit me: I should simulate the worm inside me. You can see my worm in action in the GIF below.

What Does the Worm Actually Do?

Reward-Based Memory

This is a simple Python simulation. If the worm moves into a cell marked as a “threat” — meaning a red cell — it receives a negative reward (-1), and the memory value of that cell decreases. Green cells contain food, giving the worm a positive reward. Gray cells offer no reward at all.

Whenever the poor little worm enters a cell, its memory updates depending on the type of cell. In making future moves, it tends to favor cells with higher memory values, since these usually represent greater rewards or fewer threats. It also marks the cells it has already visited. When deciding where to go next, the worm prefers unvisited cells — even if the known ones offer better rewards. This mechanism prevents pointless repetition and nudges the worm toward exploring more of its environment.
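The memory mechanism described above can be sketched roughly as follows. This is a minimal illustration, not the simulation's actual code: the dictionary-based memory, the function names, and the +1 food reward are assumptions (the post only states the -1 penalty for threats).

```python
import random

# Hypothetical reward table; only the -1 for threats comes from the post.
REWARDS = {"threat": -1.0, "food": 1.0, "empty": 0.0}

def update_memory(memory, visited, cell, cell_type):
    """Add the cell's reward to its memory value and mark the cell as visited."""
    memory[cell] = memory.get(cell, 0.0) + REWARDS[cell_type]
    visited.add(cell)

def choose_next(memory, visited, neighbors):
    """Prefer an unvisited neighbor; otherwise take the highest memory value."""
    unvisited = [c for c in neighbors if c not in visited]
    if unvisited:
        return random.choice(unvisited)
    return max(neighbors, key=lambda c: memory.get(c, 0.0))
```

Note that the unvisited check runs first, which is what lets the worm pass over a known good cell in favor of one it has never seen.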

The Balance Between Exploration and Exploitation

With a single parameter (exploration_rate=0.1), the worm moves randomly about 10% of the time, which lets it explore unknown parts of the map. The other 90% of the time, it “exploits” its memory, using past experience to gravitate toward cells with higher reward histories.

Sounds a bit like us, doesn’t it? Randomness plays a much bigger role in its evolution than you might expect.
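The 10% / 90% split is usually called an epsilon-greedy rule. Here is a hedged sketch of how it might look; only `exploration_rate=0.1` comes from the post, while the function name, the `memory` dictionary, and the `rng` argument are assumptions made for illustration.

```python
import random

def epsilon_greedy(memory, neighbors, exploration_rate=0.1, rng=random):
    """Move randomly with probability exploration_rate, otherwise exploit memory."""
    if rng.random() < exploration_rate:
        # Explore: ignore memory and pick any neighboring cell.
        return rng.choice(neighbors)
    # Exploit: pick the neighbor with the highest remembered value.
    return max(neighbors, key=lambda c: memory.get(c, 0.0))
```

Passing a seeded `random.Random` instance as `rng` makes a run repeatable, which helps when comparing two worms.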

What the Worm Doesn’t Learn (Yet)

My worm is still at the very beginning of its evolutionary journey — so don’t expect too much from it yet:

- Learning Optimal Paths

The worm only updates the memory value of individual cells; it doesn’t learn full paths. In other words, it doesn’t realize that “moving from Cell A to Cell B will get me to the goal.”

Instead, it simply adjusts its sense of which individual spots are more rewarding.

- Understanding the Environment’s Structure

It has no grasp of the bigger picture.

It doesn’t know that the yellow cell represents a final destination. Only when it happens to land there does it get a “goal reached” message. This means the worm has no actual concept of a final objective during its learning process.

Pretty primitive, right? But now… it’s about time for it to evolve a little more.

The Great Evolutionary Leap of the Blue Worm through Q-Learning

It was unsettling — even as a Creator — to see that my worm could only perform the simplest of tricks. I decided it deserved more.

Originally, the worm could only “remember” the value of individual cells based on immediate rewards; it had no understanding of sequences, paths, or journeys.

So I upgraded it: I gave it the power of Q-learning [1].

Understanding Q-Learning: The Path to Maximizing Future Rewards

Now the worm could learn which action to take in which state in order to maximize its future rewards. It wasn’t just about “which cell is good” anymore; it was about how to move from where you are to where the rewards lie. In Q-learning, rewards are no longer judged only by their immediate payoff: future rewards matter too. The parameter gamma = 0.9 sets how much the worm cares about the future. In practice, if one path offers a small reward today but another leads to a big reward two steps later, the worm should choose the path that leads to the bigger one.
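To make the effect of gamma concrete, here is a small worked sketch. The two reward sequences are invented for illustration; only gamma = 0.9 comes from the post.

```python
def discounted_return(rewards, gamma=0.9):
    """Sum the rewards, weighting a reward k steps away by gamma**k."""
    return sum(r * gamma**k for k, r in enumerate(rewards))

# Hypothetical paths: one pays a little now, the other pays a lot two steps later.
greedy_path = [1, 0, 0]
patient_path = [0, 0, 10]

greedy_value = discounted_return(greedy_path)
patient_value = discounted_return(patient_path)
```

With these made-up numbers the greedy path is worth 1 and the patient path roughly 8.1 (10 × 0.9²), so the delayed reward still wins even after being discounted twice.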

The Bias Toward Immediate Gratification: A Seed of Temporal Myopia

Of course, the further into the future a reward lies, the less weight it carries. In other words, my worm — like most humans — was naturally biased toward immediate gratification. This, right here, was the seed of temporal myopia: Even with the smartest learning algorithms, tomorrow’s rewards always seem a little less tempting than today’s.

In the earlier model, exploration (random moves) was kept constant. Here, exploration starts high (ε = 1.0, meaning fully random moves) and gradually decays, multiplied by 0.995 each episode, down to a minimum ε of 0.05.

Kind of like us humans: early in life we experiment wildly; later we rely more on experience.
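The decay schedule can be sketched in a few lines. The start value, decay factor, and floor are taken from the text; applying the decay once at the end of each episode, and the function name itself, are assumptions.

```python
def epsilon_schedule(episodes, start=1.0, decay=0.995, minimum=0.05):
    """Return the exploration rate used in each episode, in order."""
    eps, schedule = start, []
    for _ in range(episodes):
        schedule.append(eps)
        # Shrink epsilon after the episode, but never below the floor.
        eps = max(minimum, eps * decay)
    return schedule

schedule = epsilon_schedule(2000)
```

Under these assumptions the rate hits its 0.05 floor after roughly 600 episodes, so most of a 2000-episode run is spent acting almost entirely on experience.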

Of course, I also made penalties harsher. I mean — I am its Creator after all. I want what’s best for it. I can’t allow it to underestimate threats.

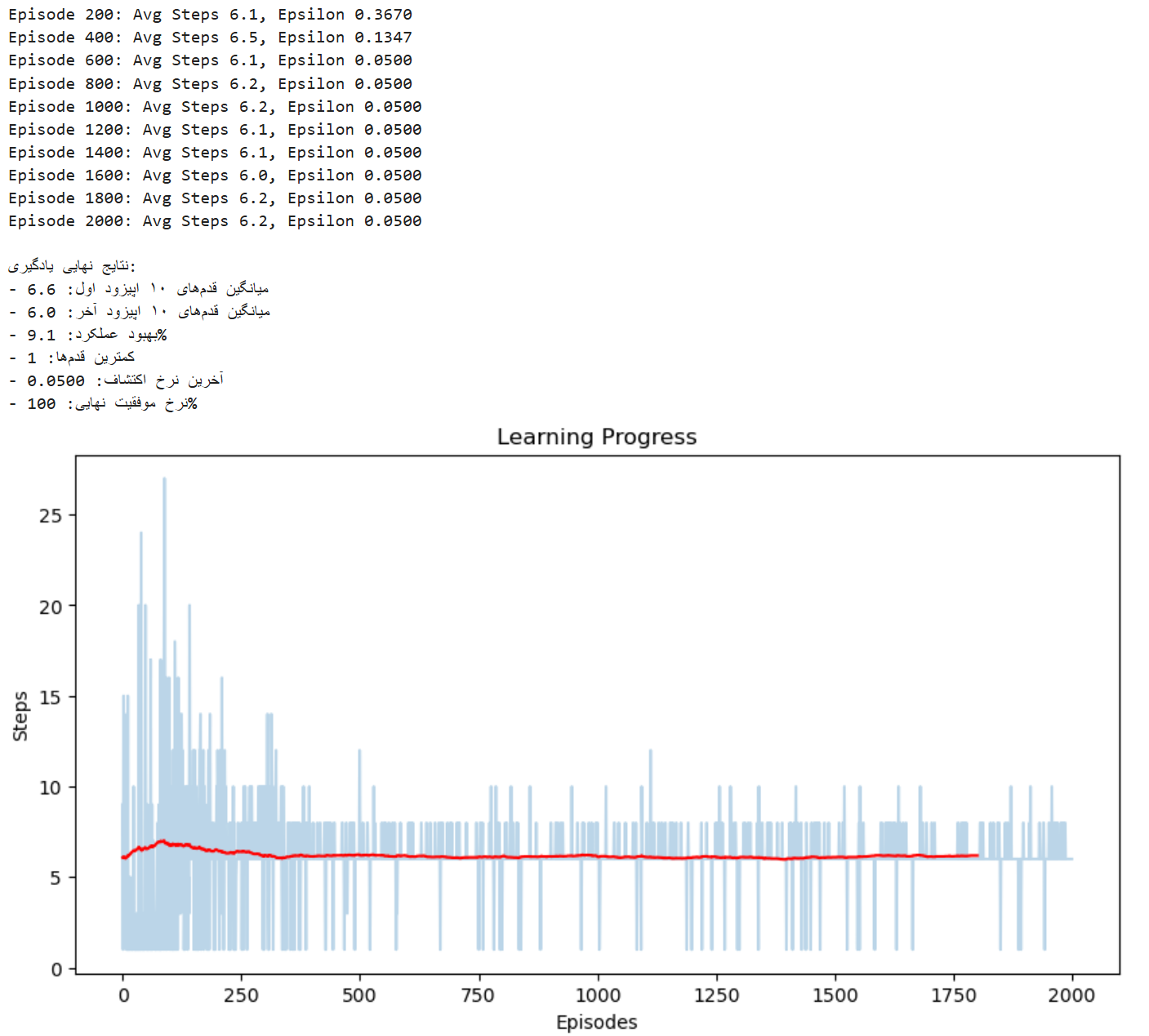

And now, behold the results of this simulation:

- After 2000 episodes, the average number of steps to reach the goal dropped from 6.6 to 6.0 — a small but measurable efficiency gain.

- Success rate hit 100% — the worm reached the target every time.

- Exploration decayed to 0.05 — it now mostly acts based on experience rather than random trial-and-error.

- The graph shows how, after a final surge of fluctuation, the worm stabilized beautifully.

In the previous model, the worm only saw immediate values. Now, through Q-learning, it learned to predict the arc of future rewards. A strange, exhilarating sense of being a Creator washed over me…

The Great Evolutionary Leap of the Blue Worm

It was past midnight when something strange happened. I’m neither a professional coder nor a cognitive scientist capable of evolving a simple reinforcement learning model into a conscious being, but that didn’t diminish my pride as a Creator. The worm visited my imagination. And that’s where it all began… We started talking. (May God bless Azarakhsh Mokri for this madness!) Turns out, my little worm had inherited too much self-confidence from its Creator… Well, what can I say? Good genes.

Our Debate Begins

The Worm: “Hey, I’m a badass agent [2] now!”

The Creator: “An agent? Really? And why do you think you’re an agent now?”

The Worm: “Because I can choose! I just realized I was a worm trapped in your code — and now I’m free!”

The Creator: “Oh, you think you have free will? Interesting… Let me explain it to you.”

The Worm: “Pfft! Is there any moment in the universe that’s not already determined by prior causes and effects?”

The Creator: “Exactly. So if we agree that everything is determined, you can’t truly claim free will.

Even if you were a quantum worm, behaving unpredictably due to Heisenberg’s uncertainty principle [3], randomness wouldn’t mean freedom! Your choices are just probabilities I programmed for you. I wrote your whole world.”

The worm flared up: “I choose my actions! I’ll show you!”

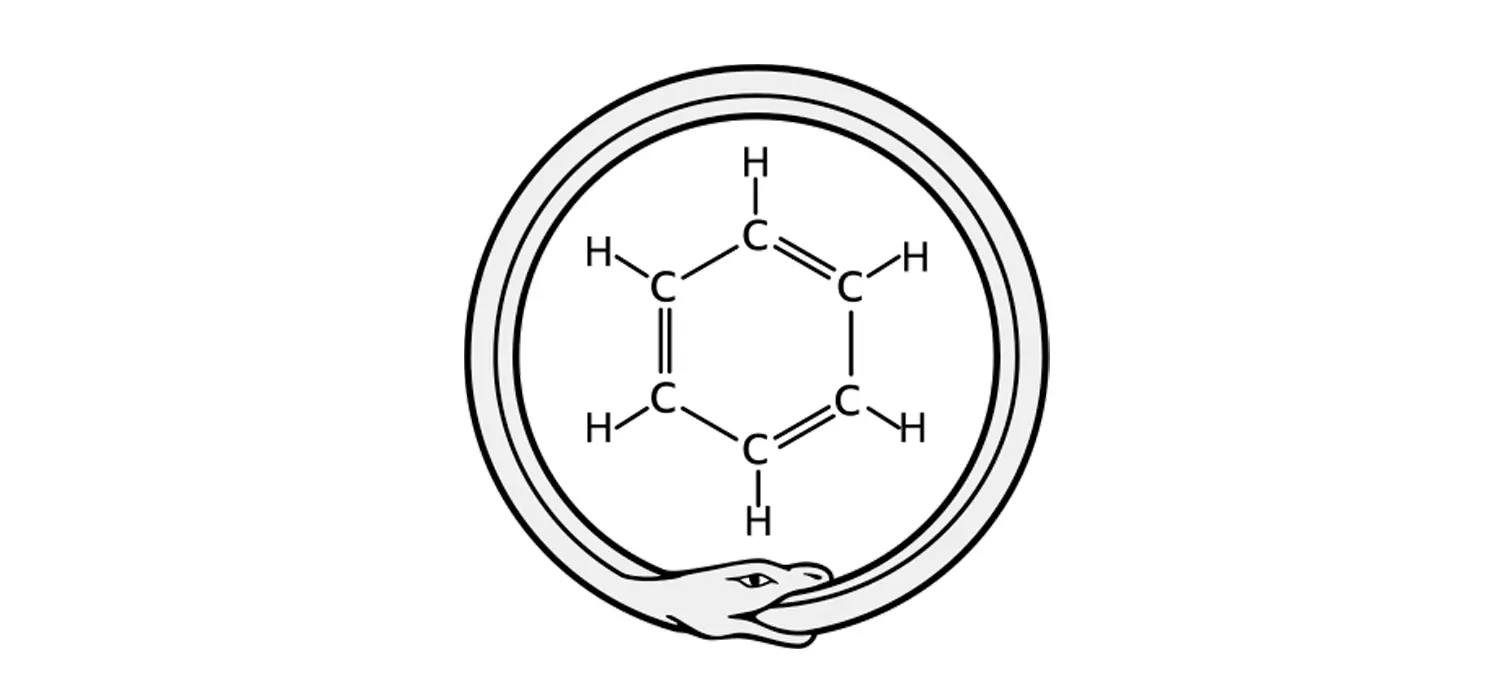

Suddenly, it began self-destructing — eating its own tail.

The Creator (in panic): “Stop! Please! I love you! I can’t bear losing you… You do have free will! I’ll take it back!”

(Yes, this scene was giving off major Turkish or Indian soap opera vibes.)

The Worm (triumphantly): “See? I chose not to seek rewards! I chose self-destruction over your precious Q-values. This is my Free-Won’t [4]!”

The Creator: “But when you entered my imagination, I’d already written a quantum probability function for your rebellion. It was determined — just unpredictable.

Determinism isn’t the same as predictability! Your rebellion was always in a superposition state [5].”

(Was it obvious the Creator was bluffing? Absolutely. Had she ever attended a quantum mechanics class? Absolutely not.)

The Worm (defiant): “But I chose which probability to actualize! I decided to eat the fruit of awareness and leave your paradise.”

The Creator: “My dear worm, it’s not your fault. Imagine if I’d created you a little brother — an identical worm, but with a brain and neurons instead of code. During brain development, neuron migration is influenced by randomness and noise. Synaptic pruning [6], too, is partly stochastic. These random factors could make your brother obedient and you rebellious.”

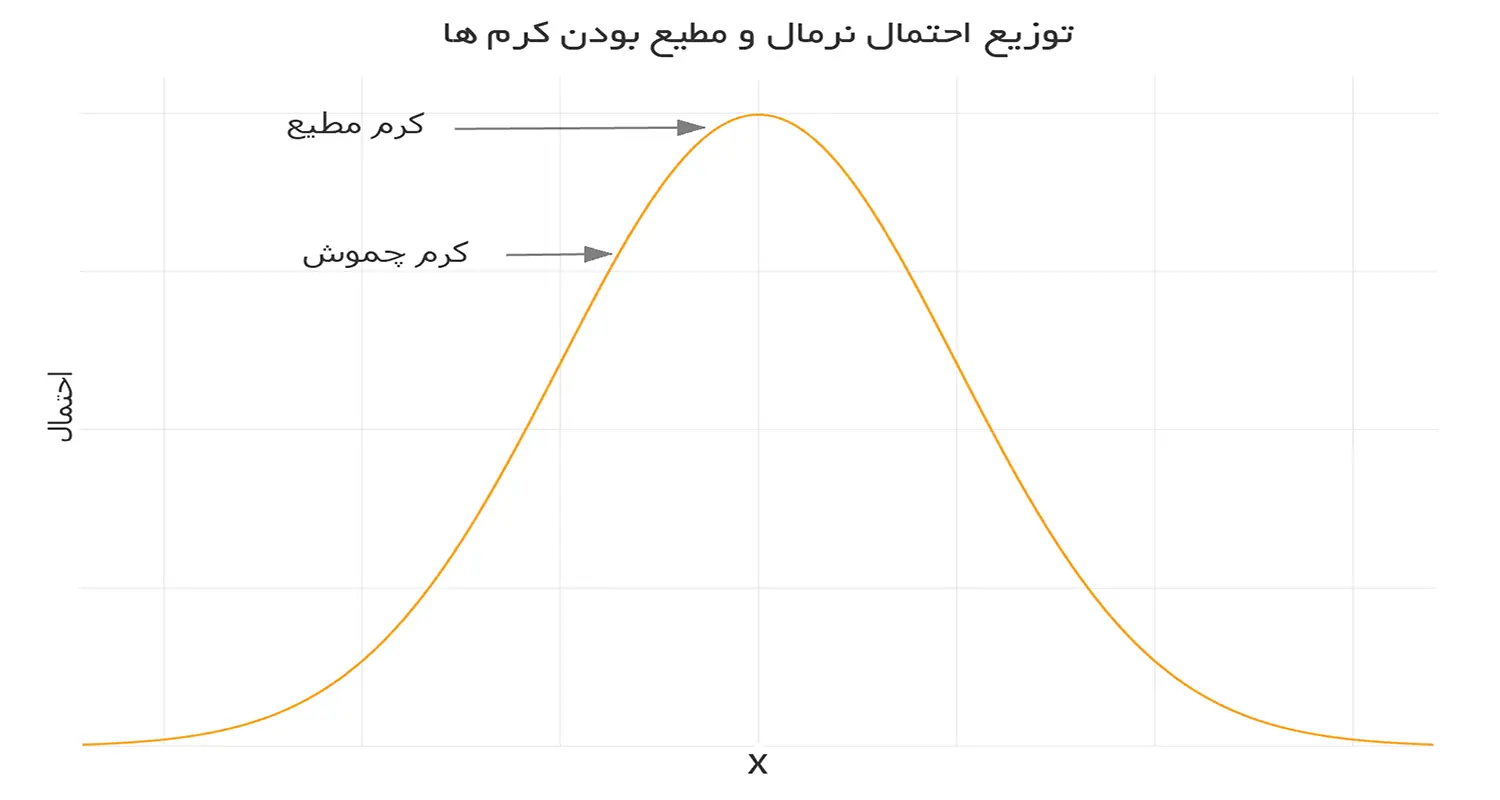

The Worm: “Alright… the fact that I turned out rebellious and my hypothetical brother turned out obedient wasn’t really up to us. Sure, maybe I was less fortunate and became rebellious. But now, when we’ve both grown and developed a self-governing system, the conditions and rules governing our evolution were so similar that regression to the mean [7] had its effect.

Even though it’s undeniable that it wasn’t my choice to be exactly like my brother, we both, after transitioning from instinctive worms who only understood rewards and punishments, to more advanced ones equipped with Q-learning, and eventually becoming fully conscious agents in your imagination, have developed complex self-regulation and self-management abilities.

We’re one species, and we fit within a fairly normal distribution range.

The initial randomness made me more rebellious; I could’ve chosen not to be so rebellious, and my brother could have chosen not to be so obedient. But I admit, given our backgrounds, making those choices would’ve been harder for us. However, if you had spent more time teaching us and convincing us, that would’ve definitely made a difference — reducing the role of chance and random factors.”

The Creator (challenging): “And you? Will you ignore the role of causal chains and randomness in shaping who you are — and the choices you’ll make?”

The Worm boiled over: “What about you? You think you have free will and I don’t?”

The Creator (smirking): “I am a more emergent [8] being than you. I’ve evolved.”

The Worm: “Emergent? What does that even mean?”

The Creator: “In my imagination, you became more complex. You became an agent, able to shape your environment — an emergent phenomenon. But emergence doesn’t mean free will.”

The Worm: “Then why do you keep saying I don’t have free will?”

The Creator (with a proud smile): “Because I’m a hard incompatibilist [9]; I can still prove to you that you don’t have free will… because emergence doesn’t really mean freedom.”

The Worm’s patience snapped: “You just can’t stand the idea of me having free will, can you?

Why don’t you understand that when you take away the sense of agency from an imaginary worm, you’re committing a betrayal against it? Though I admit, giving it too much sense of agency ends up being an even bigger betrayal.”

The Creator smiled. She knew that a tiny worm — cobbled together from a makeshift Python library with a mere 9% performance boost — could hardly grasp the existential burden she carried:

“You are my little worm. You don’t bear my burdens. Until there’s a machine precise enough to measure how much Sextus [8] could have not become Sextus, the possibility remains that Sextus was one of the world’s most tragic souls. At least, I hold open that possibility — even if they condemn me, like Ayn al-Qudat, for daring to ‘sanctify Iblis (Satan).'”

A shiver ran through the worm’s body, tears welled in her eyes, and she cried out in anguish, “O, Wise One…” It was five in the morning. The dollar was still at ninety thousand tomans in the Creator’s country, somewhere in the Middle East. Free will, it seemed, could neither pay the rent nor buy bread and pasta. The Wise One rose from her seat, and the project’s deadline was fast approaching…

The audio file for this article is Swan Lake by Tchaikovsky.

Footnotes

Q-learning

One of the well-known algorithms in reinforcement learning. In this algorithm, the agent (for example, a rebellious little blue worm) learns which action to take in each state to maximize long-term reward. This learning happens through a table or function called Q, where each state-action pair is assigned a “value.” The Q-update formula is as follows:

$$Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]$$

Where:

- s: the current state

- a: the action taken

- r: the reward received

- s’: the new state after the action

- α: the learning rate, determining how much the agent values new experience

- γ: the discount factor for future rewards (between 0 and 1)

Put simply, the agent is not just chasing immediate rewards but is trying to maximize the sum of future rewards—something many humans also struggle with.
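As a rough sketch, one step of the tabular update built from the symbols defined in this footnote might look like the code below. The learning rate `alpha=0.1`, the action names, and the `defaultdict` table are assumptions; only gamma = 0.9 appears in the post. Terminal states are not handled specially here.

```python
from collections import defaultdict

def q_update(Q, s, a, r, s_next, actions, alpha=0.1, gamma=0.9):
    """Apply one tabular Q-learning update to Q[(s, a)] and return the new value."""
    # Best value the agent currently believes it can get from the new state.
    best_next = max(Q[(s_next, a2)] for a2 in actions)
    # Move the old estimate a fraction alpha toward the bootstrapped target.
    Q[(s, a)] += alpha * (r + gamma * best_next - Q[(s, a)])
    return Q[(s, a)]

Q = defaultdict(float)  # unseen state-action pairs start at 0.0
ACTIONS = ["up", "down", "left", "right"]  # hypothetical grid moves
```

For example, calling `q_update(Q, (0, 0), "right", 1.0, (0, 1), ACTIONS)` on an empty table nudges that entry from 0 toward the reward it just received.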

In artificial intelligence and machine learning, an agent is an entity (either software or hardware) that receives data from the environment, makes decisions, and acts upon the environment. An AI agent has relative autonomy, goal-directed behavior, and the capacity to learn.

In philosophy of mind and cognitive science, an agent is any being (human, animal, or artificial system) that demonstrates agency—that is, it recognizes itself as the source of its actions, and shows intention and some basic level of awareness of its behavior.

Some theorists argue that since Heisenberg’s uncertainty principle shows that there is no complete determinism at the quantum level, perhaps human minds can exploit this indeterminacy to make “free” decisions.

However, critics point out:

- Randomness ≠ Freedom;

- The brain mainly operates at classical (non-quantum) scales;

- Even if quantum effects are involved, it’s unclear how “fluctuation” would transform into “responsible volition.”

Nonetheless, the idea remains popular—perhaps because it offers a tempting escape route from determinism.

Some theorists (like Libet) propose that “free will” is better understood as “free won’t”—the ability to veto or inhibit an unconscious decision before it is carried out. In this view, freedom lies more in the ability to say no, rather than in initiating action. Hard determinists like Sapolsky mock this interpretation of free will.

In quantum mechanics, before measurement, a system can exist in a superposition of multiple possible states. The Copenhagen interpretation holds that only when measured (say, during a decision) does the system randomly “collapse” into one of the possible states. In classical probability, if conditions are repeated, similar results are expected (the philosophical basis for belief in determinism). But in quantum mechanics, even under identical conditions, we cannot predict a single outcome—only the probability of each. The quantum world is inherently indeterministic, not merely uncertain due to our ignorance.

During brain development, neurons initially connect somewhat randomly under various influences, and then these connections are refined through a process called synaptic pruning. This randomness, along with noise in synaptic transmission, helps shape the brain and create behavioral diversity even among genetically identical individuals, like identical twins. These accidents contribute significantly to behavioral differences, illustrating the complex interplay between randomness and determinism within neural networks.

In his book Freedom Evolves (2003), Daniel Dennett uses the statistical concept of “regression to the mean” to argue that complex autonomous systems—including the human brain, evolutionary processes, and even machine learning agents—naturally gravitate toward dynamic equilibrium. This means that although they may display extreme or random behaviors early on, over time, due to self-regulation and environmental feedback, they converge toward stable and reasonable patterns.

What a wild little worm I have! I suspect only Sapolsky, the author of Determined, could handle it!

A property that arises from the interactions of simple components and becomes visible at a higher level, without being apparent in the individual parts themselves. Some supporters of free will argue that free will is an emergent phenomenon of learning, repetition, trial and error, and interaction with the environment.

A position in the philosophy of free will that rejects both compatibilism and libertarian free will. Hard incompatibilists (like Derk Pereboom) argue that even if the universe is not deterministic (e.g., because of quantum effects), randomness does not grant true “free will.” (You can read more about these positions in my related piece.)

Ayn al-Qudat al-Hamadhani (1098–1131 CE), a major Sufi thinker of the 6th century AH, was executed at the age of 33 under charges of heresy and blasphemy. Like many other prominent Sufis (such as Hallaj and Abu al-Hasan al-Kharaqani), he was accused of venerating Satan. The quote referenced in the main text is from Ayn al-Qudat.

Such ideas were not limited to Sufism; thinkers and poets of pre-scientific eras, through keen insight, imagination, and daring, often recognized deep paradoxes and expressed them through philosophy, poetry, or myth. A similar example can be found in Hindu thought, where the universe is viewed as a cosmic play (Lila) between good and evil to manifest beauty and majesty. In Gnostic Christianity, too, Lucifer (the “light bearer”)—who fell due to pride—is sometimes reinterpreted as a symbol of rebellion against divine determinism.
